Crafting a Crawl-Friendly Website Architecture

Introduction: The Importance of a Crawl-Friendly Website

A website's success in search depends largely on how easily search engine crawlers can discover and index it. A crawl-friendly website architecture makes your content more visible and lets search engines navigate and understand your site efficiently, which supports both search engine optimization (SEO) and user experience.

Search engine crawlers, often referred to as "bots" or "spiders," are automated programs that systematically fetch pages across the web and gather information about their content. A crawl-friendly architecture ensures that these crawlers can discover, understand, and index your web pages, which in turn supports your website's visibility in organic search.

This article covers the principles and strategies of crafting a crawl-friendly website architecture: optimizing URL structures and navigation, organizing content effectively, applying technical SEO best practices, and monitoring how well crawlers are able to explore your site.

Understanding the Crawling Process

Before we dive into the specific tactics for crafting a crawl-friendly website, it's essential to understand the underlying process of how search engine crawlers operate.

The Crawling Lifecycle

A page typically passes through the following stages on its way from discovery to a search result:

Discovery: Search engine crawlers begin by identifying entry points, often through links or sitemaps, to discover new pages and content on the web.

Extraction: Once a page is discovered, the crawler extracts the content, including text, images, and other media, as well as the various HTML elements and metadata.

Indexing: The extracted information is then processed and added to the search engine's index, which serves as a database of all the pages and content it has discovered.

Ranking: When a user performs a search, the search engine's algorithms analyze the indexed content and determine the most relevant and authoritative pages to display in the search results.

Understanding this crawling lifecycle is crucial for designing a website architecture that facilitates efficient and effective crawling, ultimately leading to improved visibility and rankings in search engine results.
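
The discovery and extraction steps above can be sketched in a few lines. This is a minimal illustration, not how any particular search engine works: it walks a hypothetical in-memory site (the `SITE` mapping and its URLs are made up for the example) instead of fetching pages over the network, and the `index` dictionary is only a stand-in for a real search index.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags (the extraction step)."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(pages, start_url):
    """Breadth-first discovery over an in-memory {url: html} mapping."""
    index = {}  # url -> outgoing links; a stand-in for a search index
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        if url in index or url not in pages:
            continue  # already processed, or not a page we can fetch
        parser = LinkExtractor()
        parser.feed(pages[url])
        links = [urljoin(url, href) for href in parser.links]
        index[url] = links
        queue.extend(links)  # newly found links become future entry points
    return index


# Hypothetical three-page site; /orphan has no inbound links.
SITE = {
    "https://example.com/": '<a href="/products/">Products</a>',
    "https://example.com/products/": '<a href="/">Home</a>',
    "https://example.com/orphan": "<p>No inbound links</p>",
}
discovered = crawl(SITE, "https://example.com/")
print(sorted(discovered))
```

In this sketch the `/orphan` page is never discovered because nothing links to it, which is why links and sitemaps matter as entry points.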

Crawl Budget Considerations

Search engines have limited resources and must prioritize their crawling efforts. The concept of "crawl budget" refers to the amount of time and resources a search engine is willing to allocate to crawling a website. Factors that can influence a website's crawl budget include:

- Website Size: Crawl budget matters most for large websites, where many URLs compete for the crawler's attention. Small websites are usually crawled in full without any special effort.

- Content Freshness: Search engines tend to allocate more resources to crawling websites that frequently update their content.

- Website Authority: Websites with a stronger online presence and reputation generally receive higher crawl budgets.

- Technical Optimizations: Websites that respond quickly and present few crawling obstacles, such as server errors or redirect chains, allow crawlers to fetch more pages with the same resources.

By understanding and optimizing for these crawl budget considerations, you can ensure that search engine crawlers allocate sufficient resources to efficiently explore and index your website's content.

Optimizing URL Structure for Crawlability

The URL structure of your website plays a crucial role in its crawl-friendliness. Well-structured URLs not only enhance the user experience but also help search engine crawlers better understand and navigate your website.

Implement a Clear and Logical URL Structure

Adopt a clear and logical URL structure that aligns with your website's content hierarchy and architecture. This includes:

- Hierarchical Structure: Organize your URLs in a hierarchical manner, reflecting the overall structure of your website. For example, example.com/products/category/product-name.

- Descriptive and Keyword-Rich URLs: Use descriptive, keyword-rich phrases in your URLs to provide context about the page's content. Avoid using generic or irrelevant URL segments.

- Avoid Dynamically Generated URLs: Steer clear of dynamically generated URLs with long, complex, and hard-to-read parameters. Instead, opt for static, human-readable URLs.

Implement Canonical URLs

Canonical URLs help search engines understand the definitive version of a page, especially in cases where there are multiple variations or duplicate content. By adding a canonical link element (<link rel="canonical" href="...">) to the page's <head>, you can specify the preferred URL for a page, which can help consolidate link equity and prevent duplicate content issues.
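
As a rough sketch of how URL variants might be collapsed to one preferred form, the helper below normalizes a URL and renders the canonical link element for it. The `TRACKING_PARAMS` set and the choice to drop trailing slashes are assumptions made for this example; each site needs its own policy.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters never change page content on this hypothetical site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def canonical_url(url):
    """Normalize a URL variant to one preferred form."""
    parts = urlsplit(url)
    # Keep only parameters that affect content, in a stable order.
    query = [(k, v) for k, v in parse_qsl(parts.query) if k not in TRACKING_PARAMS]
    # Assumption: this site prefers paths without a trailing slash.
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(sorted(query)), "")
    )


def canonical_tag(url):
    """Render the link element that belongs in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_tag("https://Example.com/shoes/?utm_source=news&color=red#top"))
```

Every variant of the page would emit the same tag, so search engines see a single preferred URL regardless of which variant they crawled.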

Use Hyphens for URL Separators

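When creating URLs, use hyphens (-) to separate words rather than underscores (_) or spaces. Hyphens are the separator Google recommends: they are treated as word boundaries, whereas words joined by underscores may be read as a single term, and spaces must be percent-encoded as %20, which makes URLs harder to read.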
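
One way to apply the hierarchy and hyphen guidelines consistently is to generate URL segments from page titles in code. The sketch below is illustrative only; `slugify` and `build_url` are hypothetical helper names, and the ASCII-only policy is an assumption that may not suit sites with non-Latin content.

```python
import re
import unicodedata


def slugify(text):
    """Lowercase, strip accents, and join words with hyphens."""
    ascii_text = (
        unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    )
    # Any run of characters other than a-z and 0-9 becomes a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def build_url(base, *segments):
    """Join slugified segments into a hierarchical path under `base`."""
    return base.rstrip("/") + "/" + "/".join(slugify(s) for s in segments)


print(build_url("https://example.com", "Products", "Trail Shoes", "Café Racer"))
```

Because underscores and spaces both fall outside `a-z0-9`, they are converted to hyphens along with any other punctuation.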

Ensure URL Consistency

Maintain consistency in your URL structure across your website. Avoid mixing different URL formats or patterns, as this can confuse both users and search engine crawlers.

Implement Redirects Properly

If you need to change the URL of a page, implement 301 (permanent) redirects to ensure that search engines and users are directed to the new location. This helps maintain the page's ranking and preserves any existing link equity.
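
When URLs change more than once, redirects can pile up into chains, and each extra hop costs the crawler another request. The text above does not discuss chains, so treat this as a related housekeeping sketch: it takes a hypothetical old-path to new-path mapping and points every old URL directly at its final destination, so each can be served with a single 301.

```python
def flatten_redirects(redirects):
    """Point every old URL directly at its final destination.

    `redirects` maps old path -> new path. Raises ValueError on a loop.
    """
    flat = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a path that is not itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


# Hypothetical redirect map with a two-hop chain.
REDIRECTS = {
    "/old-shoes": "/shoes-2023",
    "/shoes-2023": "/products/shoes",
}
print(flatten_redirects(REDIRECTS))
```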

Optimizing Navigation for Crawlability

Effective navigation is crucial for both user experience and search engine crawlability. By optimizing your website's navigation, you can ensure that search engine crawlers can easily discover and access all the relevant content on your site.

Create a Clear and Intuitive Site Structure

Develop a clear and intuitive site structure that reflects the hierarchy and organization of your website's content. This helps users and search engine crawlers understand the relationships between different pages and navigate your website more effectively.

Implement a Comprehensive Navigation Menu

Ensure that your website's main navigation menu includes links to all the important sections and pages. This allows search engine crawlers to discover and index your content more efficiently.

Use Breadcrumb Navigation

Implement breadcrumb navigation, which displays the user's current location within the website's hierarchical structure. Breadcrumbs not only help users understand their position but also provide additional context for search engine crawlers.
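
If your URLs already mirror the site hierarchy, a breadcrumb trail can be derived from the path itself. The following is a minimal sketch under that assumption; deriving labels from slugs is a simplification, and a real site would more likely look up page titles.

```python
from urllib.parse import urlsplit


def breadcrumbs(url):
    """Return (label, url) pairs from the site root down to the current page."""
    parts = urlsplit(url)
    root = f"{parts.scheme}://{parts.netloc}"
    trail = [("Home", root + "/")]
    path = ""
    for segment in filter(None, parts.path.split("/")):
        path += "/" + segment
        # Assumption: the slug is a reasonable source for the visible label.
        trail.append((segment.replace("-", " ").title(), root + path))
    return trail


for label, link in breadcrumbs("https://example.com/products/running-shoes/trail-runner"):
    print(label, link)
```

Each entry in the trail is a plain link to an ancestor page, which gives crawlers an additional path up the hierarchy from every page.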

Prioritize Internal Linking

Establish a robust internal linking structure by including relevant links between related pages on your website. This helps search engine crawlers discover and understand the connections between your content, allowing them to better index and rank your pages.
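
One way to audit internal linking is to model the site as a graph and measure how many clicks each page is from the homepage. The sketch below assumes you already have a mapping of each page to the pages it links to (the `LINKS` data is hypothetical); pages that cannot be reached at all are reported as orphans.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: minimum number of clicks from `home` to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, home):
    """Pages present in the link map that cannot be reached from `home`."""
    return sorted(set(links) - set(click_depths(links, home)))


# Hypothetical link map: page -> pages it links to.
LINKS = {
    "/": ["/products", "/blog"],
    "/products": ["/products/shoes"],
    "/products/shoes": [],
    "/blog": ["/"],
    "/landing-2019": ["/"],
}
print(click_depths(LINKS, "/"))
print(orphan_pages(LINKS, "/"))
```

Here `/landing-2019` links out but receives no links, so a crawler starting at the homepage would never find it through navigation alone.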

Avoid Excessive Pagination

While pagination can be useful for organizing content, excessive use of pagination can hinder search engine crawlers' ability to discover and index all the relevant pages on your website. Optimize pagination by limiting the number of paginated pages and ensuring that each page is easily accessible through your website's navigation.

Optimizing Content Organization for Crawlability

The way you organize and structure your website's content plays a crucial role in its crawlability. By implementing effective content organization strategies, you can ensure that search engine crawlers can efficiently navigate and understand your website's information.

Utilize Semantic Markup

Leverage semantic HTML markup to provide additional context and meaning to your content. This includes using appropriate heading tags (H1, H2, H3, etc.), as well as other semantic elements like <article>, <section>, and <nav>. Semantic markup helps search engines better comprehend the structure and hierarchy of your content.

Optimize Page Titles and Meta Descriptions

Ensure that your page titles and meta descriptions are well-crafted and accurately reflect the content of each page. This helps search engine crawlers understand the relevance and purpose of your web pages, which can positively impact their indexing and ranking.

Implement Structured Data Markup

Utilize structured data markup based on the Schema.org vocabulary, commonly embedded as JSON-LD, to provide additional context and meaning to your content. This structured data can include information about your business, products, reviews, events, and more. Search engines can then use this structured data to enhance the display of your website in search results, which can improve its visibility and click-through rate.
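
As an illustration, structured data for a product page could be generated as a JSON-LD script element. This is a minimal sketch: the function name and the sample product are hypothetical, and only a handful of Schema.org Product and Offer properties are shown.

```python
import json


def product_jsonld(name, description, price, currency):
    """Serialize a Schema.org Product as a JSON-LD <script> element."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
    }
    # Escape "</" so the payload cannot close the surrounding script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(product_jsonld("Trail Runner", "Lightweight trail shoe", 89.5, "USD"))
```

Generating the markup from the same data that renders the page helps keep the structured data consistent with the visible content.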

Organize Content into Logical Sections

Group related content into logical sections and subsections, using appropriate heading tags (H1, H2, H3, etc.) to establish a clear content hierarchy. This helps search engine crawlers better understand the organization and relationships between different pieces of content on your website.
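
A heading hierarchy can be checked mechanically. The sketch below flags places where a heading level is skipped (for example, an H2 followed directly by an H4); treating skipped levels as a problem is a convention assumed for this example rather than a rule stated by any search engine.

```python
from html.parser import HTMLParser


class HeadingAudit(HTMLParser):
    """Records heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def skipped_heading_levels(html):
    """Return a message for each heading that jumps more than one level down."""
    audit = HeadingAudit()
    audit.feed(html)
    issues = []
    for prev, cur in zip(audit.levels, audit.levels[1:]):
        if cur > prev + 1:
            issues.append(f"h{prev} is followed by h{cur}")
    return issues


print(skipped_heading_levels("<h1>Shoes</h1><h2>Trail</h2><h4>Sizing</h4>"))
```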

Optimize Image and Media Descriptions

Ensure that all images, videos, and other media on your website are properly described using alt text, captions, and other relevant metadata. This additional context helps search engine crawlers better understand the content and purpose of your multimedia assets.
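
A simple audit can list images that lack alt text. This sketch treats a missing or blank `alt` attribute as a finding; note that an empty alt is legitimate for purely decorative images, so the output is a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Collects the src of every <img> whose alt text is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if not (attributes.get("alt") or "").strip():
                self.missing.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    audit = AltTextAudit()
    audit.feed(html)
    return audit.missing


PAGE = '<img src="a.png" alt="Red trail shoe"><img src="b.png"><img src="c.png" alt=" ">'
print(images_missing_alt(PAGE))
```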

Implementing Technical SEO Optimizations

Technical SEO plays a crucial role in ensuring the crawlability and indexability of your website. By addressing various technical aspects, you can enhance the overall performance and accessibility of your website, making it easier for search engine crawlers to explore and index your content.

Optimize the Website's Architecture

Ensure that your website's overall architecture is well-designed and scalable. This includes:

- Implementing a logical and intuitive URL structure

- Utilizing a responsive and mobile-friendly design

- Optimizing page load times and site speed

Implement a Robust Sitemap

Create and submit a comprehensive XML sitemap to search engines, which provides a structured list of all the pages on your website. This helps search engine crawlers discover and index your content more efficiently.
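
A sitemap in the sitemaps.org format can be generated with the Python standard library. The sketch below is a minimal example that assumes you can enumerate your pages and their last-modified dates; the URLs and dates shown are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """entries: iterable of (absolute URL, last-modified date as YYYY-MM-DD)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters are escaped on output
        ET.SubElement(url, "lastmod").text = lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


# Placeholder pages for illustration.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-03-01"),
    ("https://example.com/products/shoes?color=red&size=9", "2024-03-10"),
])
print(sitemap_xml)
```

The resulting file is typically served from the site root and referenced from robots.txt or submitted through the search engine's webmaster tools.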

Ensure Proper Robots.txt Configuration

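Configure your website's robots.txt file to tell search engine crawlers which pages or directories they should or should not crawl. This can help conserve crawl budget by keeping crawlers away from low-value URLs. Note that robots.txt controls crawling, not indexing: a disallowed URL can still appear in search results if other pages link to it, so content that must stay out of the index needs a noindex directive or authentication rather than a robots.txt rule alone.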
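
Before deploying a robots.txt change, it can help to test the rules against a few representative URLs. The sketch below uses Python's standard `urllib.robotparser`; the rules and URLs are hypothetical, and the standard-library parser implements simple prefix matching, so its verdicts may differ from a specific search engine's parser for wildcard patterns.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of the cart and internal search results.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="Googlebot"):
    """True if the parsed rules allow `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/products/shoes",
    "https://example.com/cart/checkout",
    "https://example.com/search?q=shoes",
):
    print(url, is_crawlable(url))
```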

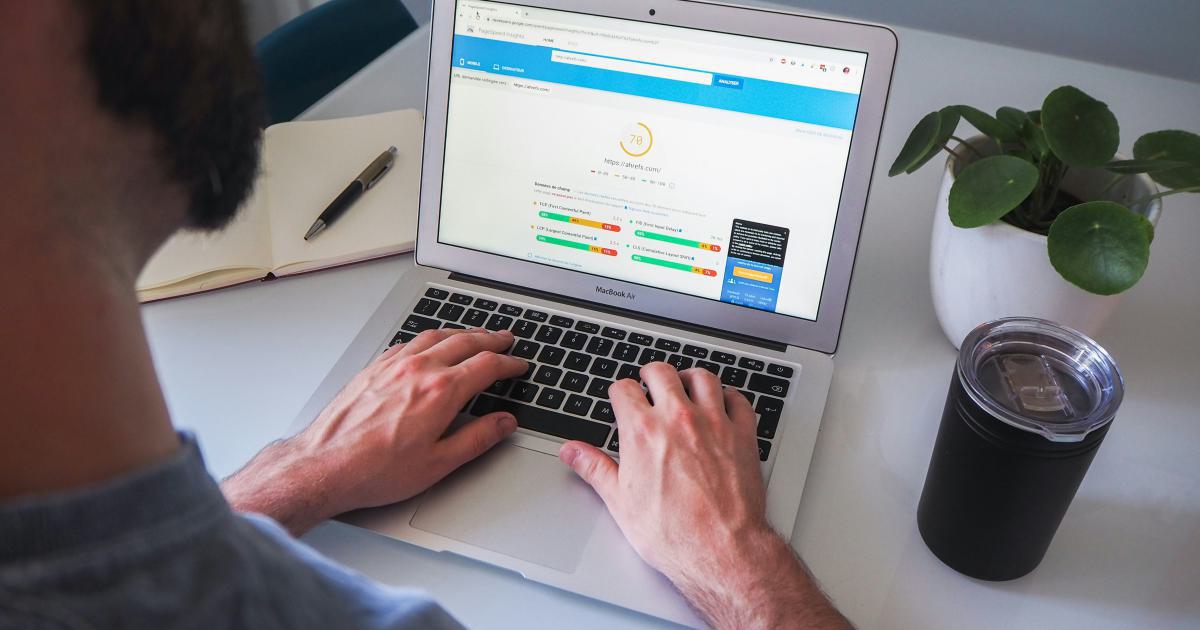

Leverage Google Search Console

Utilize Google Search Console to monitor your website's performance, identify any crawling or indexing issues, and receive valuable insights from Google about your website's visibility and performance in search results.

Optimize for Mobile-Friendliness

With the increasing prevalence of mobile device usage, it's crucial to ensure that your website is mobile-friendly. This includes implementing a responsive design, optimizing page speed for mobile, and addressing any mobile-specific SEO considerations.

Address Potential Crawling Obstacles

Identify and address any potential crawling obstacles on your website, such as:

- JavaScript-heavy pages that may be difficult for crawlers to interpret

- Content hidden behind login forms or paywalls

- Duplicate content issues

- Broken links or server errors

By addressing these technical SEO factors, you can create a more crawl-friendly website architecture and improve your overall search engine visibility.

Measuring and Monitoring Crawl Effectiveness

Continually monitoring and measuring the effectiveness of your website's crawlability is essential for maintaining and improving its search engine visibility over time. Here are some key metrics and tools to consider:

Crawl Rate and Crawl Depth

Monitor the rate at which search engine crawlers are visiting your website and the depth to which they are exploring your content. This can help you identify any issues with your website's crawlability and make necessary adjustments.

Indexed Pages

Regularly check the number of pages from your website that have been indexed by search engines. This metric can reveal whether search engines are able to discover and index your content effectively.

Crawl Error Reports

Utilize tools like Google Search Console to monitor and address any crawl errors or issues that search engine crawlers may encounter on your website. This can help you identify and resolve any technical problems that could be hindering the crawling process.
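
Server access logs offer a complementary view of crawl rate and crawl errors. The sketch below counts requests per day from a given crawler and tallies the 4xx and 5xx responses it received. It assumes the common "combined" log format and identifies the crawler by user-agent substring only; user agents can be spoofed, so a production audit would also verify the requesting IP addresses. The sample log lines are invented for the example.

```python
import re
from collections import Counter

# Assumption: access log lines follow the common "combined" format.
LINE = re.compile(
    r'\[(?P<day>[^:]+):[^\]]+\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def crawler_stats(lines, bot="Googlebot"):
    """Return (hits per day, error counts keyed by (status, path)) for `bot`."""
    hits_per_day, errors = Counter(), Counter()
    for line in lines:
        m = LINE.search(line)
        if not m or bot not in m["agent"]:
            continue
        hits_per_day[m["day"]] += 1
        if m["status"][0] in "45":  # client or server error
            errors[(m["status"], m["path"])] += 1
    return hits_per_day, errors


# Invented sample lines: two crawler requests and one browser request.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Mar/2024:06:25:01 +0000] "GET /products/shoes HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Mar/2024:06:25:09 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Mar/2024:06:26:00 +0000] "GET / HTTP/1.1" 200 8120 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
]
hits, errors = crawler_stats(SAMPLE_LOG)
print(dict(hits), dict(errors))
```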

Website Performance Metrics

Monitor your website's overall performance metrics, such as page load times, mobile-friendliness, and Core Web Vitals. These factors can impact both user experience and search engine crawlability, so optimizing them can lead to improvements in your website's visibility and ranking.

Competitor Analysis

Analyze the crawl-friendliness and indexation of your competitors' websites. This can provide valuable insights into industry best practices and help you identify areas where you can improve your own website's crawlability.

By regularly monitoring and measuring these key metrics, you can continuously optimize your website's crawl-friendliness and ensure that search engine crawlers can efficiently discover, understand, and index your content.

Conclusion: Maintaining a Crawl-Friendly Website

Crafting a crawl-friendly website architecture is an ongoing process that requires a strategic and holistic approach. By implementing the principles and strategies outlined in this article, you can create a website that is easily navigable and indexable by search engine crawlers, ultimately improving your website's visibility and driving more qualified traffic to your online presence.

Remember, a crawl-friendly website is not just about technical optimizations - it's about creating a user-friendly, well-organized, and accessible platform that search engines can efficiently explore and understand. By continuously monitoring, measuring, and optimizing your website's crawlability, you can stay ahead of the curve and ensure that your content remains easily discoverable by your target audience.