Handling Crawl Errors: A Comprehensive Guide

Introduction: Understanding Crawl Errors

Crawl errors are a common issue that website owners and SEO professionals face when trying to optimize their websites for search engine visibility. These errors occur when search engine bots, known as "crawlers," are unable to access or properly index certain pages on a website. Crawl errors can have a significant impact on a website's search engine rankings, user experience, and overall performance.

In this comprehensive guide, we will explore the different types of crawl errors, their causes, and the best strategies for identifying, diagnosing, and resolving them. We will also discuss the importance of regular crawl error monitoring and prevention, as well as the long-term benefits of maintaining a healthy website structure.

Types of Crawl Errors

Crawl errors can manifest in various forms, each with its own unique implications for website performance and search engine optimization. Let's dive into the most common types of crawl errors:

1. 4xx Client Errors

4xx client errors indicate that the server received the request but could not or would not fulfill it as sent. For crawlers, these errors are typically caused by broken links, pages that were moved or deleted without a redirect, or access restrictions.

The most common 4xx client errors include:

- 404 Not Found: This error occurs when a requested page or resource is not available on the website.

- 403 Forbidden: This error indicates that the user or crawler does not have permission to access the requested resource.

- 410 Gone: This error signifies that the requested resource has been permanently removed from the website.

2. 5xx Server Errors

5xx server errors indicate that the issue is on the server-side, either with the website's infrastructure or the application running on the server. These errors can be caused by server overload, software bugs, or misconfigured server settings.

The most common 5xx server errors include:

- 500 Internal Server Error: This error occurs when the server encounters an unexpected condition that prevents it from fulfilling the request.

- 503 Service Unavailable: This error indicates that the server is temporarily unable to handle the request, often due to maintenance or high traffic.
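
The status code families above can be separated by numeric range, which is handy when triaging a crawl report. The following is a minimal sketch; the function name and the label strings are assumptions chosen for illustration, not part of any tool's output.

```python
def classify_status(status: int) -> str:
    """Map an HTTP status code to a coarse crawl-outcome label.

    The labels are hypothetical; rename them to match your own reports.
    """
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if status in (404, 410):
        # Missing or permanently removed pages.
        return "missing"
    if 400 <= status < 500:
        # Other client errors, such as 403 Forbidden.
        return "client_error"
    if 500 <= status < 600:
        return "server_error"
    return "unknown"


for code in (200, 403, 404, 410, 500, 503):
    print(code, classify_status(code))
```

Separating 404 and 410 from other 4xx codes is a design choice here: missing pages usually call for a redirect or link fix, while a 403 usually points to a permissions problem.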

3. Soft 404 Errors

Soft 404 errors occur when a page returns a 200 OK HTTP status code even though its content indicates that the requested page does not exist, for example a "Page Not Found" message or a nearly empty page. Because the status code signals success, the crawler cannot tell from the response alone that the page is missing.
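
A rough way to flag soft 404 candidates is to look for a 200 response whose visible text is very short or contains a "not found" phrase. This is only a heuristic sketch: the phrase list, the length threshold, and the function names are all assumptions, and the regex-based tag stripping is deliberately crude.

```python
import re

# Hypothetical phrases and threshold; tune both for your own templates.
NOT_FOUND_PHRASES = ("page not found", "no longer available")
MIN_TEXT_LENGTH = 200  # assumed cutoff for a "nearly empty" page


def visible_text(html: str) -> str:
    """Crudely drop script/style blocks and tags, then collapse whitespace."""
    html = re.sub(r"(?is)<(script|style)\b.*?</\1>", " ", html)
    text = re.sub(r"(?s)<[^>]+>", " ", html)
    return re.sub(r"\s+", " ", text).strip()


def looks_like_soft_404(status: int, html: str) -> bool:
    """Return True for a 200 response that reads like an error or empty page."""
    if status != 200:
        return False  # a real error status is not a *soft* 404
    text = visible_text(html).lower()
    if len(text) < MIN_TEXT_LENGTH:
        return True
    return any(phrase in text for phrase in NOT_FOUND_PHRASES)
```

Pages flagged this way still need a manual look, since a legitimate short page or an article that quotes the phrase would also match.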

4. Blocked by Robots.txt

This error occurs when a search engine crawler is prevented from accessing a page by a rule in the website's robots.txt file. The robots.txt file tells crawlers which URLs they are allowed to crawl. It does not control indexing by itself: a blocked URL can still be indexed if other pages link to it.
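
To see which URLs a given set of rules would block, Python's standard `urllib.robotparser` module can evaluate them offline. The sketch below is illustrative: the rules, the example.com URLs, and the `blocked_urls` helper are assumptions. Note that Python's parser applies the first matching rule, which can differ from a search engine's own matching logic for overlapping rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""


def blocked_urls(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Return the URLs that the given rules disallow for this user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse in-memory rules, no network call
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://example.com/admin/login",
    "https://example.com/products/widget",
]
print(blocked_urls(ROBOTS_TXT, "Googlebot", urls))
```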

5. Crawl Anomalies

Crawl anomalies are instances where the search engine crawler encounters unexpected behavior or data on the website that does not fit the categories above, such as redirect chains or loops, canonicalization issues, or JavaScript rendering problems.

Identifying and Diagnosing Crawl Errors

To effectively address crawl errors, website owners and SEO professionals must first identify and diagnose the underlying issues. Here are the key steps in the crawl error identification and diagnosis process:

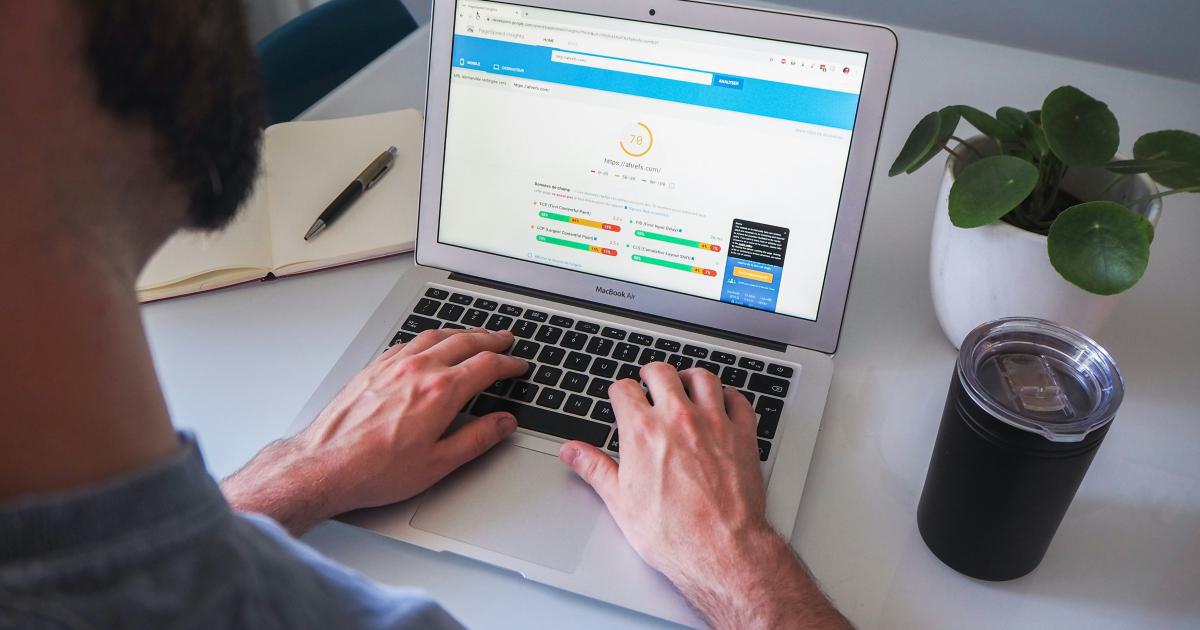

1. Utilize Webmaster Tools and Search Console

Google Search Console (previously known as Google Webmaster Tools) is a powerful tool for monitoring and managing your website's search engine performance. It provides detailed reports on crawl errors, allowing you to identify and address issues quickly.

2. Leverage Site Crawling Tools

In addition to Search Console, there are various third-party site crawling tools available, such as Screaming Frog, Ahrefs, and Semrush. These tools can provide a comprehensive overview of your website's crawl errors, as well as additional insights and data that can aid in the diagnosis process.

3. Analyze Server Logs

Server logs can provide valuable information about the interactions between search engine crawlers and your website. By analyzing these logs, you can gain a deeper understanding of the specific crawl errors and their potential causes.
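
As one illustration of log analysis, the sketch below counts error responses served to a single crawler. It assumes the common "combined" access log format and identifies the crawler by a substring of the user-agent header, which can be spoofed; the sample lines, the regular expression, and the `crawler_errors` name are all illustrative assumptions.

```python
import re
from collections import Counter

# Assumes the "combined" access log format; adjust for your server's format.
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def crawler_errors(lines, bot_token="Googlebot"):
    """Count (status, path) pairs for 4xx/5xx responses served to one crawler."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if not match or bot_token not in match["agent"]:
            continue  # unparseable line or a different client
        status = int(match["status"])
        if status >= 400:
            counts[(status, match["path"])] += 1
    return counts


# Made-up sample lines for illustration.
SAMPLE = [
    '66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /old-page HTTP/1.1" 404 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2024:13:56:01 +0000] "GET / HTTP/1.1" 200 1024 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Oct/2024:13:57:12 +0000] "GET /old-page HTTP/1.1" 404 512 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]
print(crawler_errors(SAMPLE))
```

Because the function accepts any iterable of lines, an open log file can be passed to it directly.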

4. Conduct Manual Checks

In addition to the automated tools, perform manual checks on your website to catch crawl errors or issues that the tools may miss. This can include manually browsing your website, checking for broken links, and reviewing the website's technical infrastructure.

Resolving Crawl Errors

Once you have identified and diagnosed the crawl errors affecting your website, it's time to take action and resolve them. Here are the key steps in the crawl error resolution process:

1. Fix 4xx Client Errors

To address 4xx client errors, you'll need to identify the root cause and take appropriate actions. This may involve:

- Fixing broken links and redirecting users to the correct pages

- Ensuring that pages are not missing or deleted without proper redirects

- Verifying that the website's permissions and access controls are configured correctly

2. Resolve 5xx Server Errors

5xx server errors require a closer look at the website's server infrastructure and application settings. Potential solutions may include:

- Optimizing server resources and configurations to prevent overloads

- Identifying and addressing software bugs or issues within the website's application

- Ensuring that the website's hosting environment is stable and reliable

3. Eliminate Soft 404 Errors

To address soft 404 errors, you can:

- Improve the content and structure of the affected pages to ensure they provide the expected information

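- Return a real 404 or 410 status code for pages that no longer exist, or redirect them with a 301 to the most relevant replacement page, so users and search engines are guided to the correct content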

- Enhance the website's information architecture and navigation to minimize the occurrence of soft 404 errors

4. Manage Robots.txt Blocking

If certain pages are being blocked by the website's robots.txt file, review the rules and ensure that they are up-to-date and correctly configured. This may involve:

- Allowing search engine crawlers to access important pages

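- Disallowing crawling of irrelevant pages (robots.txt is publicly readable, so protect sensitive pages with authentication rather than relying on it alone)

- Ensuring that the robots.txt file is correctly formatted and served from the root of the host, at /robots.txt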

5. Address Crawl Anomalies

Crawl anomalies can be more complex to diagnose and resolve, as they often involve technical issues with the website's structure, content, or rendering. Potential solutions may include:

- Optimizing the website's JavaScript and other dynamic content

- Ensuring proper canonicalization and URL structure

- Addressing any underlying technical issues or bugs within the website's codebase
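
For the canonicalization point, one common step is to normalize URL variants so that duplicates of the same page can be grouped and reviewed. The sketch below uses only `urllib.parse`; the tracking-parameter list and the policy of dropping trailing slashes are assumptions that should match whatever your site actually treats as canonical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that do not change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid"}
DEFAULT_PORTS = {"http": 80, "https": 443}


def normalize_url(url: str) -> str:
    """Return a normalized form of the URL for duplicate detection."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    if parts.port and parts.port != DEFAULT_PORTS.get(scheme):
        host = f"{host}:{parts.port}"  # keep only non-default ports
    path = parts.path or "/"
    if len(path) > 1 and path.endswith("/"):
        path = path.rstrip("/")  # assumed policy: no trailing slash
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    ]
    query = urlencode(sorted(params))  # stable parameter order
    return urlunsplit((scheme, host, path, query, ""))  # fragment dropped


print(normalize_url("HTTPS://Example.com:443/Shoes/?utm_source=x&b=2&a=1#top"))
```

URLs that share a normalized form but are served as separate pages are candidates for a canonical tag or a redirect.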

Ongoing Monitoring and Prevention

Effectively managing crawl errors is an ongoing process that requires continuous monitoring and proactive prevention strategies. Here are some best practices to maintain a healthy website and avoid crawl errors:

1. Implement Regular Crawl Error Monitoring

Regularly monitor your website's crawl errors using tools like Google Search Console, Screaming Frog, and other site crawling solutions. This will help you identify and address issues as they arise, preventing them from escalating.

2. Automate Crawl Error Reporting

Set up automated alerts and reports to notify you of any new crawl errors detected on your website. This will allow you to address issues promptly and prevent them from impacting your website's search engine visibility and performance.
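
The core of such an alert is a comparison between two crawl snapshots, so that only newly broken URLs are reported. A minimal sketch follows, assuming each snapshot is a hypothetical mapping of URL path to the last observed status code; how the snapshots are collected and how the alert is delivered are left out.

```python
def new_errors(previous: dict[str, int], current: dict[str, int]) -> dict[str, int]:
    """Return URLs that are errors now but were not errors in the previous run.

    URLs missing from the previous snapshot are treated as new.
    """
    return {
        url: status
        for url, status in current.items()
        if status >= 400 and previous.get(url, 0) < 400
    }


# Made-up snapshots for illustration.
yesterday = {"/a": 200, "/b": 404}
today = {"/a": 500, "/b": 404, "/c": 404}
print(new_errors(yesterday, today))
```

Reporting only the difference keeps alerts short: "/b" was already known to be broken, so it is not reported again.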

3. Maintain a Robust Redirect Strategy

Ensure that any pages that are moved, deleted, or restructured on your website have appropriate redirects in place: a 301 for permanent moves and a 302 only for temporary ones. This will help mitigate the impact of crawl errors and maintain a seamless user experience.
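
Redirect rules accumulate over time, and chains or loops can creep in. The sketch below walks a redirect map and reports the full hop sequence; the map of source path to target path is a hypothetical stand-in for however your redirects are actually stored.

```python
def trace_redirects(start: str, redirects: dict[str, str]) -> tuple[list[str], bool]:
    """Follow redirects from `start`; return (hops, looped)."""
    hops = [start]
    while hops[-1] in redirects:
        target = redirects[hops[-1]]
        if target in hops:
            return hops + [target], True  # revisiting a URL means a loop
        hops.append(target)
    return hops, False


# Made-up redirect map for illustration.
REDIRECTS = {
    "/old": "/interim",
    "/interim": "/new",
    "/a": "/b",
    "/b": "/a",
}
print(trace_redirects("/old", REDIRECTS))
print(trace_redirects("/a", REDIRECTS))
```

A result with more than two hops indicates a chain that could be collapsed into a single redirect to the final destination.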

4. Optimize Website Structure and Navigation

Regularly review and refine your website's information architecture, content structure, and navigation to minimize the risk of crawl errors. This includes ensuring that all pages are logically connected, internal linking is optimized, and there are no orphaned or isolated pages.
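
Orphaned pages can be found by comparing the set of known pages (for example, from a sitemap) with the set reachable by following internal links from the home page. This is a sketch under assumptions: the link graph and page set are made-up inputs, and building them from a real crawl is not shown.

```python
from collections import deque


def find_orphans(links: dict[str, set[str]], all_pages: set[str], home: str = "/") -> set[str]:
    """Return pages that cannot be reached from `home` via internal links."""
    reachable = {home}
    queue = deque([home])
    while queue:  # breadth-first traversal of the link graph
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return all_pages - reachable


# Made-up link graph: page -> pages it links to.
LINKS = {
    "/": {"/products", "/about"},
    "/products": {"/products/widget"},
    "/landing-2019": {"/"},
}
PAGES = {"/", "/products", "/about", "/products/widget", "/landing-2019"}
print(find_orphans(LINKS, PAGES))
```

Here "/landing-2019" links out to the home page, but nothing links to it, so it is reported as orphaned.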

5. Stay Vigilant with Technical Website Audits

Conduct periodic technical audits of your website to identify and address any underlying technical issues that could lead to crawl errors. This may include evaluating the website's code, server configurations, and overall infrastructure.

Conclusion: The Importance of Crawl Error Management

Effectively managing crawl errors is a critical component of maintaining a healthy, search engine-optimized website. By understanding the different types of crawl errors, implementing a robust identification and diagnosis process, and adopting proven resolution strategies, website owners and SEO professionals can ensure that their websites are easily accessible and properly indexed by search engines.

Continuous monitoring, proactive prevention, and regular technical audits are the keys to maintaining a website that consistently delivers a good user experience and strong search engine visibility.
