Taming the Beast: Crawling JavaScript-Heavy Websites

Understanding the Challenge

As the web evolves, websites have become increasingly reliant on JavaScript to deliver dynamic, interactive, and feature-rich experiences. While this advancement has brought about remarkable improvements in user engagement, it has also presented a unique challenge for web crawlers and search engines tasked with indexing and understanding these JavaScript-heavy websites.

Crawling JavaScript-driven websites is a complex task, as traditional web crawlers often struggle to fully render and parse the content hidden behind layers of dynamic code. This can lead to incomplete or inaccurate indexing, which can significantly impact a website's visibility and searchability on the internet.

In this comprehensive article, we will delve into the intricacies of crawling JavaScript-heavy websites, exploring the obstacles, the evolving solutions, and the best practices that can help you tame this "beast" and ensure your website's content is effectively indexed and discovered by search engines.

The Rise of JavaScript-Powered Websites

The widespread adoption of JavaScript has transformed the web landscape, enabling developers to create more engaging, interactive, and dynamic user experiences. From single-page applications (SPAs) to complex e-commerce platforms, JavaScript has become an essential component of modern web development.

The benefits of JavaScript-powered websites are numerous:

Enhanced User Experience: JavaScript allows for smooth, responsive, and interactive user interfaces, improving overall user engagement and satisfaction.

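Improved Performance: Techniques like lazy loading and code splitting reduce how much code a page must load up front. Client-side rendering can make navigation between views feel faster once the application has loaded, although it usually adds work before the first content appears.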

Increased Interactivity: JavaScript enables the creation of dynamic features, such as drop-down menus, form validations, and real-time updates, which enhance the user's experience.

Simplified Development: The rise of JavaScript frameworks and libraries, such as React, Angular, and Vue.js, has simplified the development process, allowing for more efficient and scalable web applications.

However, this shift towards JavaScript-heavy websites has also introduced challenges for search engines and web crawlers, which have traditionally relied on static HTML content to understand and index web pages.

The Challenges of Crawling JavaScript-Heavy Websites

Traditional web crawlers, such as those used by search engines like Google, operate by following links, parsing HTML content, and extracting relevant information to build their index. This approach works well for websites that primarily use server-rendered HTML, but it often falls short when it comes to JavaScript-powered websites.
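The classic fetch, parse, and follow loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular search engine is implemented: `crawl`, `LinkExtractor`, and the in-memory `SITE` dictionary (standing in for real HTTP fetches) are hypothetical names chosen for this example. The crawler reads only the raw HTML and follows only `<a href>` links.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags; nothing else counts as a link here."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl: fetch raw HTML, parse it, queue unseen same-site links."""
    site = urlparse(start_url).netloc
    seen, queue, visited = {start_url}, deque([start_url]), []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)  # raw HTML only; no JavaScript is executed
        if html is None:
            continue  # treat unknown pages as dead ends
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments, as most crawlers do.
            absolute = urldefrag(urljoin(url, href)).url
            if urlparse(absolute).netloc == site and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Hypothetical in-memory site standing in for HTTP responses.
SITE = {
    "https://example.com/": '<a href="/products">Products</a> <a href="/about">About</a>',
    "https://example.com/products": '<a href="/products/42">Widget</a>'
    '<button onclick="location.href=\'/deals\'">Deals</button>',
    "https://example.com/products/42": "<h1>Widget</h1>",
    "https://example.com/about": "<p>About us</p>",
    "https://example.com/deals": "<h1>Deals</h1>",  # linked only from a click handler
}

if __name__ == "__main__":
    print(crawl("https://example.com/", SITE.get))
```

The `/deals` page is never visited: the only route to it is a JavaScript click handler on a `<button>`, which a link-following crawler has no reason to treat as a link.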

The main challenges in crawling JavaScript-heavy websites include:

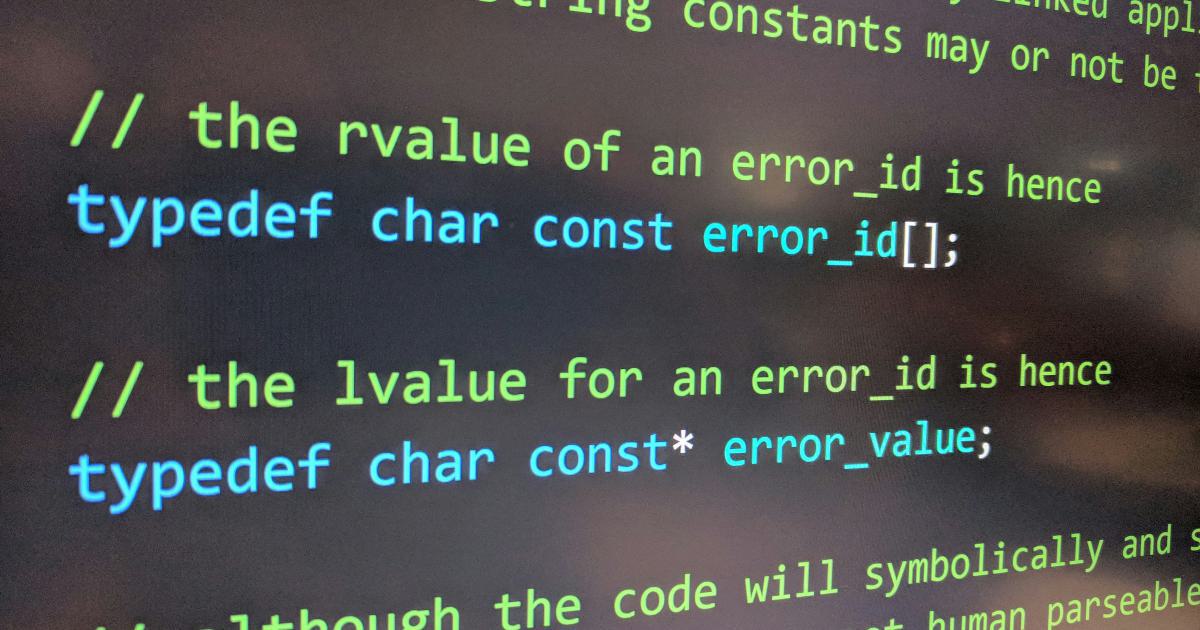

1. Rendering and Parsing JavaScript

Many web crawlers fetch only the raw HTML returned by the server and do not execute JavaScript at all. Crawlers that do render pages, such as Googlebot, typically do so as a separate, resource-limited step after the initial crawl. In either case, content that is generated or loaded by JavaScript may be missed or captured only partially, leading to incomplete or inaccurate indexing.
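To make the difference concrete, the sketch below extracts the text a non-rendering crawler could index from two versions of the same page. Both HTML strings and the `indexable_text` helper are hypothetical illustrations: the first is a typical client-side-rendered shell, the second is a server-rendered equivalent.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text a non-rendering crawler would see, skipping <script>/<style> bodies."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def indexable_text(html):
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


# Hypothetical client-rendered shell: the products exist only after app.js runs.
CSR_SHELL = """<html><head><title>Shop</title></head>
<body><div id="root"></div><script src="/app.js"></script></body></html>"""

# Hypothetical server-rendered version of the same page.
SSR_PAGE = """<html><head><title>Shop</title></head>
<body><div id="root"><h1>Widgets</h1><p>Blue widget, $10</p></div>
<script src="/app.js"></script></body></html>"""

if __name__ == "__main__":
    print(repr(indexable_text(CSR_SHELL)))
    print(repr(indexable_text(SSR_PAGE)))
```

For the client-rendered shell, the only indexable text is the page title, `Shop`; the server-rendered page also exposes the heading and product details.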

2. Asynchronous Content Loading

Many JavaScript-powered websites load content asynchronously, using techniques like AJAX or dynamic imports. This can cause issues for web crawlers, as they may not be able to detect and index the content that is loaded after the initial page load.
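The timing problem can be simulated with Python's `asyncio`. This sketch is an illustration, not a model of any real crawler: `FakePage`, `wait_for`, `snapshot`, and the delay values are hypothetical. A renderer that snapshots the page before the simulated API response arrives captures nothing, while one that waits for the content captures it. Browser automation tools expose the same idea directly, for example Playwright's `page.wait_for_selector` or Puppeteer's `page.waitForSelector`.

```python
import asyncio


class FakePage:
    """Hypothetical stand-in for a page whose content arrives via an async request."""

    def __init__(self, api_delay):
        self.content = ""
        self._api_delay = api_delay

    async def load(self):
        # Simulates the page's JavaScript fetching data after the initial HTML is parsed.
        await asyncio.sleep(self._api_delay)
        self.content = "Product list"


async def wait_for(predicate, timeout, interval=0.01):
    """Poll predicate() until it is true or the timeout expires; return whether it became true."""
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout
    while not predicate():
        if loop.time() >= deadline:
            return False
        await asyncio.sleep(interval)
    return True


async def snapshot(api_delay, render_budget):
    """Return what a renderer captures if it waits at most render_budget seconds."""
    page = FakePage(api_delay)
    task = asyncio.create_task(page.load())
    await wait_for(lambda: page.content != "", timeout=render_budget)
    captured = page.content
    task.cancel()  # stop the simulated request if it is still pending
    try:
        await task
    except asyncio.CancelledError:
        pass
    return captured


if __name__ == "__main__":
    print(repr(asyncio.run(snapshot(api_delay=0.2, render_budget=0.05))))
    print(repr(asyncio.run(snapshot(api_delay=0.05, render_budget=1.0))))
```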

3. Complex Navigation and Interactions

JavaScript-heavy websites often feature complex navigation structures, interactive elements, and single-page application (SPA) architectures. Crawlers generally do not click buttons, scroll, or fill in forms, so content that appears only after such user interactions may never be discovered or indexed.

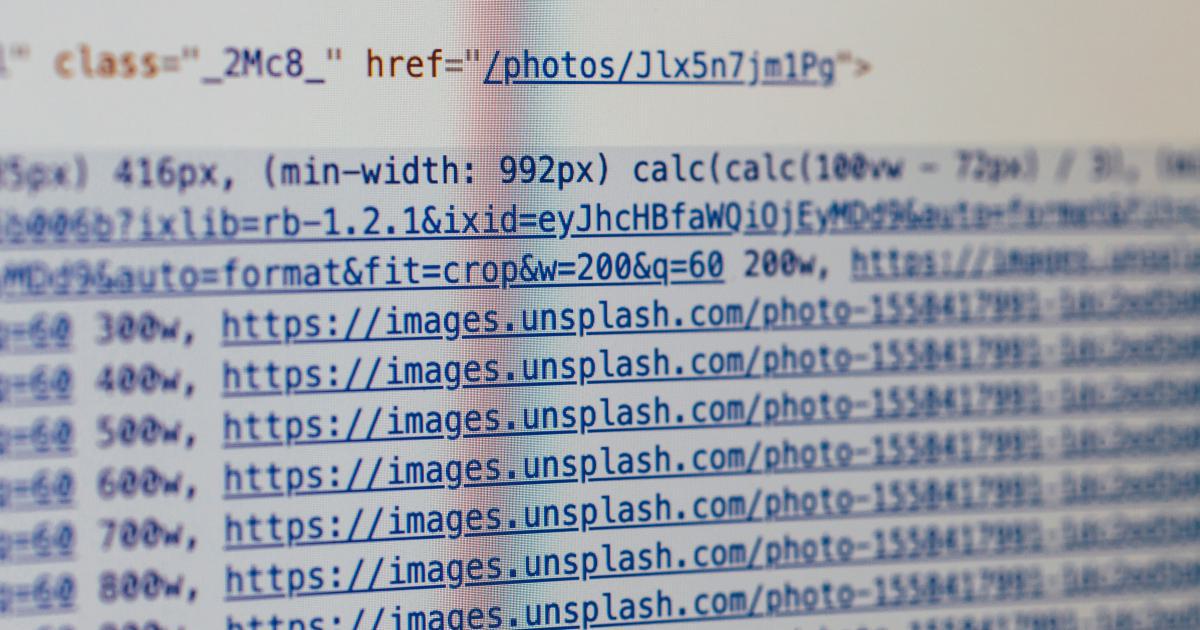

4. JavaScript-Driven URLs and Links

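In some cases, JavaScript is used to generate or manipulate URLs and links, making it difficult for web crawlers to follow and index the relevant content. Common examples include navigation handled by click handlers on elements such as `<span>` or `<button>` instead of `<a href>` links, `javascript:` URLs, and hash-based routes such as `#/products`, which crawlers usually treat as the same URL as the page they appear on.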
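A quick way to find such patterns is to audit a page's markup. Google's link guidance, for instance, asks for `<a>` elements with an `href` attribute. The sketch below is a rough heuristic, not a complete crawlability checker; the `LinkAudit` class, `audit_links` helper, and sample markup are hypothetical. It flags `javascript:` URLs, fragment-only URLs, and click-handler navigation.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Sorts link-like elements into ones a crawler can follow and ones it likely cannot."""

    def __init__(self):
        super().__init__()
        self.crawlable = []
        self.problems = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        href = a.get("href")
        if tag == "a" and href:
            if href.lower().startswith("javascript:"):
                self.problems.append(("javascript: URL", href))
            elif href.startswith("#"):
                # Hash-based routes like "#/cart" look like one URL to most crawlers.
                self.problems.append(("fragment-only URL", href))
            else:
                self.crawlable.append(href)
        elif "onclick" in a:
            # Navigation done purely in a click handler has no URL for a crawler to follow.
            self.problems.append((f"<{tag}> with onclick, no href", a["onclick"]))


def audit_links(html):
    audit = LinkAudit()
    audit.feed(html)
    audit.close()
    return audit.crawlable, audit.problems


# Hypothetical navigation markup mixing crawlable and non-crawlable patterns.
SAMPLE_NAV = """
<a href="/products">Products</a>
<a href="#/cart">Cart</a>
<a href="javascript:openDeals()">Deals</a>
<span onclick="router.push('/about')">About</span>
"""

if __name__ == "__main__":
    ok, issues = audit_links(SAMPLE_NAV)
    print("crawlable:", ok)
    for kind, value in issues:
        print("problem:", kind, value)
```

Only `/products` comes back as crawlable. The fix for the other three is usually the same: render a real `<a href="/path">` and let JavaScript intercept the click for client-side routing.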

5. Excessive Use of JavaScript

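While JavaScript can enhance user experiences, excessive use of it can hurt website performance and make it harder for web crawlers to process content efficiently. Large bundles take longer to download and execute, so a crawler with a limited rendering budget may capture a page before its content has appeared.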

These challenges highlight the need for a more sophisticated approach to crawling and indexing JavaScript-heavy websites, ensuring that search engines can accurately understand and surface the relevant content to users.

Evolving Solutions for Crawling JavaScript-Heavy Websites

To address the challenges posed by JavaScript-powered websites, search engines and web crawlers have been evolving their capabilities, incorporating various techniques and technologies to improve their ability to render, parse, and index dynamic content.

1. Headless Browsers

One of the most effective solutions for crawling JavaScript-heavy websites is the use of headless browsers. A headless browser, such as Chrome or Chromium running in headless mode, is a full browser engine without a visible window. Automation tools such as Puppeteer and Selenium control these browsers programmatically, allowing web crawlers to render and interact with JavaScript-driven content in a more comprehensive manner.

By employing headless browsers, web crawlers can now navigate through complex user interactions, execute JavaScript code, and extract the fully rendered content, ensuring a more accurate indexing process.
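The sketch below compares what a non-rendering crawler and a rendering crawler see for the same URL. It swaps in Playwright's Python API, another automation tool in the same family as Puppeteer and Selenium, to drive headless Chromium. It assumes Playwright is installed (`pip install playwright` followed by `playwright install chromium`). The helper names and the `example-crawler` User-Agent are hypothetical. Waiting for `networkidle` is one heuristic for "the page has finished loading"; a specific selector is often more reliable.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class _Links(HTMLParser):
    def __init__(self):
        super().__init__()
        self.hrefs = set()

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href") if tag == "a" else None
        if href:
            self.hrefs.add(href)


def links_in(html):
    parser = _Links()
    parser.feed(html)
    return parser.hrefs


def fetch_raw(url):
    """What a non-rendering crawler gets: the HTTP response body, no JavaScript executed."""
    req = Request(url, headers={"User-Agent": "example-crawler"})  # hypothetical UA
    with urlopen(req, timeout=30) as resp:
        return resp.read().decode("utf-8", errors="replace")


def fetch_rendered(url, timeout_ms=30_000):
    """What a rendering crawler gets: the DOM serialized after headless Chromium runs the JS."""
    # Imported lazily so the other helpers work without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url, wait_until="networkidle", timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()


def links_only_after_rendering(raw_html, rendered_html):
    """Links that exist only after JavaScript runs, i.e. ones a non-rendering crawler misses."""
    return links_in(rendered_html) - links_in(raw_html)
```

Typical usage is `links_only_after_rendering(fetch_raw(url), fetch_rendered(url))` for a page on your own site. A large result suggests that navigation depends on client-side rendering.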

2. Prerendering and Server-Side Rendering

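Another approach is to render content before it reaches the crawler. With server-side rendering (SSR), the server generates the HTML for a page on each request. With prerendering (also called static generation), pages are rendered ahead of time, often at build time, and served as static HTML files. Either way, the crawler receives HTML that already contains the content, so the page can be crawled and indexed without executing JavaScript.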

Prerendering and SSR can help ensure that the content is accessible and understandable to web crawlers, while still allowing for the benefits of client-side JavaScript to enhance the user experience.
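The distinction can be shown with one render function used in two ways. In this minimal sketch, the `PRODUCTS` data, `render_product`, `prerender`, and the `/products/<slug>/index.html` layout are hypothetical. An SSR server would call `render_product` on every request, while prerendering calls it once per route at build time and writes the results to disk.

```python
import html
import tempfile
from pathlib import Path

# Hypothetical content source; in practice this might be a CMS or database query.
PRODUCTS = {
    "widget": {"name": "Blue Widget", "price": "$10"},
    "gadget": {"name": "Red Gadget", "price": "$25"},
}


def render_product(slug):
    """Render one route to complete HTML. An SSR server would call this per request."""
    p = PRODUCTS[slug]
    name = html.escape(p["name"])
    return (
        f"<!doctype html><html><head><title>{name}</title></head><body>"
        f'<div id="root"><h1>{name}</h1><p>{html.escape(p["price"])}</p></div>'
        # The client bundle can still hydrate this markup for interactivity.
        '<script src="/app.js" defer></script></body></html>'
    )


def prerender(out_dir):
    """Prerendering: render every known route once and write static files."""
    out = Path(out_dir)
    written = []
    for slug in PRODUCTS:
        target = out / "products" / slug / "index.html"
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(render_product(slug), encoding="utf-8")
        written.append(target)
    return written


if __name__ == "__main__":
    for path in prerender(tempfile.mkdtemp()):
        print(path)
```

The trade-off is the usual one: prerendering is cheap to serve but requires a rebuild when content changes. SSR always reflects current data but does rendering work on every request.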

3. Progressive Web Apps (PWAs)

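Progressive Web Apps (PWAs) are web applications that use features such as service workers and a web app manifest to provide a fast, installable, app-like experience. Being a PWA does not by itself make content crawlable: a PWA built as a purely client-rendered SPA faces the same challenges described above. PWAs are crawler-friendly when they are combined with server-side rendering or prerendering, so that the initial HTML already contains the essential content.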

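By pairing PWA techniques, such as service workers and app-like features, with server-rendered HTML, websites can keep their JavaScript-powered interactivity while ensuring that essential content is still accessible to web crawlers. Crawlers generally load each URL fresh, without state from earlier visits, so essential content should not depend on a service worker to be delivered.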

4. Improved Crawling Capabilities

Search engines have also been improving their own ability to handle JavaScript-heavy websites. Google, for example, announced in 2019 that Googlebot would render pages with a regularly updated ("evergreen") version of Chromium, allowing it to execute most modern JavaScript and index a wider range of dynamic content. Other crawlers vary widely in their JavaScript support.

These advancements in crawling capabilities, combined with the evolving solutions mentioned above, have made it easier for search engines to accurately index and understand JavaScript-powered websites, improving their visibility and discoverability in search results.

Best Practices for Crawling JavaScript-Heavy Websites

To ensure that your JavaScript-heavy website is effectively crawled and indexed by search engines, it's important to follow a set of best practices. These practices can help you optimize your website's structure, content, and technical implementation, making it more accessible and understandable for web crawlers.

1. Utilize Server-Side Rendering (SSR) or Prerendering

Implementing server-side rendering (SSR) or prerendering can significantly improve the crawlability of your JavaScript-heavy website. By rendering the initial content on the server (or ahead of time) and serving it as complete HTML, you can ensure that the essential content is easily accessible to web crawlers.

2. Optimize for Progressive Web App (PWA) Architecture

Adopting a Progressive Web App (PWA) architecture can help you strike a balance between providing a rich, interactive user experience and ensuring that your content is crawlable and indexable by search engines. This works only if the PWA serves its essential content as server-rendered or prerendered HTML and uses client-side JavaScript to enhance it. A PWA that renders everything in the browser gains no crawlability benefit from being a PWA.

3. Implement Effective Fallbacks and Alternatives

While the evolving solutions mentioned earlier have greatly improved the ability to crawl JavaScript-heavy websites, it's still important to provide fallbacks and alternatives for web crawlers that may not be able to fully execute JavaScript. This can include:

- Offering a basic, server-rendered HTML version of your website's content

- Ensuring that key content and links are also available in plain HTML format

- Providing clear and descriptive alt text for images and other visual elements
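Missing alt text is easy to check automatically. This sketch, with hypothetical `ImageAltAudit` and `images_missing_alt` names, flags `<img>` elements that have no `alt` attribute at all. It deliberately accepts an empty `alt=""`, which is the conventional way to mark purely decorative images.

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Flags <img> elements with no alt attribute (alt="" is allowed for decorative images)."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags also reach this method via the default
        # handle_startendtag implementation.
        if tag == "img":
            a = dict(attrs)
            if "alt" not in a:
                self.missing_alt.append(a.get("src", "(no src)"))


def images_missing_alt(html):
    audit = ImageAltAudit()
    audit.feed(html)
    audit.close()
    return audit.missing_alt


# Hypothetical markup: a described logo, a decorative divider, and an undescribed hero image.
SAMPLE_IMAGES = (
    '<img src="logo.png" alt="Acme logo">'
    '<img src="divider.png" alt="">'
    '<img src="hero.jpg"/>'
)

if __name__ == "__main__":
    print(images_missing_alt(SAMPLE_IMAGES))
```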

4. Monitor and Validate Crawling Efforts

Regularly monitor your website's crawling and indexing performance to identify any issues or bottlenecks. Tools like Google Search Console, Bing Webmaster Tools, and third-party SEO platforms can provide valuable insights into how search engines are interacting with your JavaScript-heavy website.
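Dashboards can be complemented by a simple automated check, for example in a deployment pipeline, that fetches a page without running JavaScript and confirms that key content and links are present in the raw HTML. Search Console's URL Inspection tool, for instance, can show the HTML Google rendered for a page. The sketch below covers the no-JavaScript case only. `check_url`, `missing_from_raw_html`, and the User-Agent string are hypothetical. The check is a plain substring match, so it is deliberately crude.

```python
from urllib.request import Request, urlopen


def missing_from_raw_html(raw_html, required):
    """Return the required snippets that are absent from the unrendered HTML."""
    return [snippet for snippet in required if snippet not in raw_html]


def check_url(url, required, user_agent="crawlability-check/0.1"):  # hypothetical UA
    """Fetch a URL without executing JavaScript; return (HTTP status, missing snippets).

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses, which a
    pipeline can treat as a failed check.
    """
    req = Request(url, headers={"User-Agent": user_agent})
    with urlopen(req, timeout=30) as resp:
        body = resp.read().decode("utf-8", errors="replace")
        return resp.status, missing_from_raw_html(body, required)
```

A call such as `check_url("https://example.com/products/widget", ["Blue Widget", 'href="/products"'])` would then fail the build whenever the list of missing snippets is non-empty.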

5. Optimize JavaScript Usage and Performance

While JavaScript is a powerful tool, excessive or inefficient use of the language can negatively impact your website's crawlability and performance. Optimize your JavaScript usage by:

- Minimizing the amount of JavaScript required for essential content and functionality

- Implementing code splitting and lazy loading techniques to reduce the initial JavaScript payload

- Optimizing JavaScript file sizes, minifying code, and leveraging browser caching
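To see why code splitting shrinks the initial payload, consider how bundlers such as webpack, Rollup, and Vite typically treat dynamic `import()` calls: the imported module goes into a separate chunk that loads on demand. The simplified model below computes the initial download as everything reachable from the entry point through static imports. The module graph and sizes are hypothetical, and real bundlers also handle shared chunks, compression, and caching.

```python
# Hypothetical module graph: name -> (size in KB, static imports, dynamic imports).
MODULES = {
    "main": (40, ["router", "ui"], ["checkout", "charts"]),
    "router": (15, [], []),
    "ui": (60, ["icons"], []),
    "icons": (25, [], []),
    "checkout": (80, ["ui", "payments"], []),
    "payments": (50, [], []),
    "charts": (120, [], []),
}


def static_closure(entry, modules):
    """Modules that must load up front: the entry plus everything it statically imports."""
    needed, stack = set(), [entry]
    while stack:
        name = stack.pop()
        if name not in needed:
            needed.add(name)
            stack.extend(modules[name][1])
    return needed


def initial_payload_kb(entry, modules, split_dynamic=True):
    """Initial download size, with or without splitting dynamic imports into lazy chunks."""
    if split_dynamic:
        needed = static_closure(entry, modules)
    else:
        # Without splitting, dynamic imports behave like static ones (one big bundle).
        merged = {n: (s, st + dyn, []) for n, (s, st, dyn) in modules.items()}
        needed = static_closure(entry, merged)
    return sum(modules[n][0] for n in needed)


if __name__ == "__main__":
    print(initial_payload_kb("main", MODULES, split_dynamic=False))  # 390
    print(initial_payload_kb("main", MODULES, split_dynamic=True))   # 140
```

In this example, splitting off `checkout` and `charts` cuts the initial download from 390 KB to 140 KB. That is less JavaScript for browsers and rendering crawlers to fetch and execute before the main content appears.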

By following these best practices, you can effectively tame the "beast" of crawling JavaScript-heavy websites and ensure that your content is properly indexed and discoverable by search engines, which improves your website's visibility and can bring in more search traffic.

Conclusion

The rise of JavaScript-powered websites has transformed the web landscape, delivering more engaging and dynamic user experiences. However, this shift has also introduced challenges for traditional web crawlers, which struggle to fully render and parse the content hidden behind layers of JavaScript.

To address these challenges, search engines have improved their ability to render JavaScript, headless browsers have made it practical for crawlers to execute page scripts, and site owners can use prerendering and server-side rendering to deliver complete HTML up front. Progressive Web Apps (PWAs) that combine these rendering techniques with client-side enhancements offer a balanced approach, allowing for rich client-side experiences while keeping essential content accessible to web crawlers.

By following the best practices outlined in this article, including leveraging server-side rendering, optimizing for PWA architecture, implementing fallbacks, and monitoring crawling performance, you can effectively tame the "beast" of crawling JavaScript-heavy websites, ensuring your content is properly indexed and discovered by search engines.

As the web continues to evolve, the importance of addressing the challenges posed by JavaScript-powered websites will only grow. By staying ahead of the curve and adopting the right strategies, you can ensure your website remains visible, searchable, and accessible to both users and search engines, unlocking its full potential in the dynamic and ever-changing digital landscape.