Unlocking Growth: How a 71% Increase in Crawl Rate Boosted Organic Traffic

The Importance of Crawlability in Driving Organic Growth

In the ever-evolving world of digital marketing, one factor that is often overlooked is website crawlability. While many businesses focus on creating visually appealing websites and crafting compelling content, they may neglect the technical foundations that allow search engines to navigate and index their sites. This oversight can significantly reduce a website's organic traffic and overall visibility.

Crawlability, simply put, refers to how easily search engine bots can discover and access the pages on a website so that their content can be understood and indexed. It is a fundamental element of Search Engine Optimization (SEO) because a page that cannot be crawled is unlikely to be indexed, and a page that is not indexed cannot rank in search engine results pages (SERPs). When a website's crawlability is optimized, its content is reachable, well-structured, and easy to navigate, which supports more complete indexation and, in turn, higher organic traffic.

The Impact of Improved Crawlability

In a recent case study, a leading digital marketing agency partnered with a client in the e-commerce industry to boost the client's organic traffic. By focusing on the website's crawlability, the agency achieved a 71% increase in crawl rate and a corresponding 27% increase in organic traffic.

This case study shows how much crawlability optimization can contribute to organic growth and underscores the importance of this often-overlooked aspect of SEO. By understanding how search engines crawl a site and implementing targeted strategies, businesses can unlock new avenues for growth and improve their online visibility.

Assessing Crawlability: Key Metrics and Indicators

Evaluating a website's crawlability involves analyzing a range of metrics and indicators that provide insights into the ease with which search engines can navigate and index its content. By monitoring these factors, businesses can identify areas for improvement and implement effective strategies to enhance their website's crawlability.

Crawl Rate and Crawl Budget

One of the primary metrics to consider is the crawl rate: how frequently search engine bots request a website's pages. A higher crawl rate generally indicates that search engines are actively engaged with the website and are discovering new or updated content promptly.
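Crawl rate can be estimated directly from server access logs. The sketch below counts Googlebot requests per day. It assumes logs in the Apache/Nginx "combined" format, and the function name `daily_crawl_rate` is purely illustrative.

```python
import re
from collections import Counter
from datetime import datetime

# Assumed "combined" log format (Apache/Nginx default); adjust for other layouts.
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "\S+ (?P<path>\S+) \S+" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def daily_crawl_rate(lines, bot_token="Googlebot"):
    """Count requests per calendar day whose user agent contains bot_token."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or bot_token not in match.group("agent"):
            continue  # skip malformed lines and non-bot traffic
        # Timestamps look like "10/Oct/2023:13:55:36 +0000".
        stamp = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z")
        counts[stamp.date()] += 1
    return counts
```

Because any client can claim to be Googlebot in its user-agent string, a production analysis should also verify the requesting IP addresses (for example, with a reverse DNS lookup) before trusting these counts.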

However, search engines do not crawl without limit. Each site has a finite "crawl budget": the number of URLs a search engine is able and willing to crawl on that site within a given period. By optimizing a website's crawlability, businesses can ensure that this budget is spent on valuable pages rather than on duplicates, redirects, or error pages.
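To see where crawl budget is going, the same kind of access log can be bucketed by outcome. The hypothetical `crawl_budget_breakdown` helper below, again assuming combined-format logs, reports the share of bot requests that hit errors, 3xx responses, query-string URLs, or clean pages. Counting every parameterized URL as potential waste is a simplifying assumption; some sites serve legitimate content through query strings.

```python
import re
from collections import Counter

# Assumed "combined" log format; only the path, status, and user agent are used.
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+) \S+" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def crawl_budget_breakdown(lines, bot_token="Googlebot"):
    """Return the share of bot requests falling into each outcome bucket."""
    buckets = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or bot_token not in match.group("agent"):
            continue
        status = int(match.group("status"))
        if status >= 400:
            buckets["error"] += 1
        elif status >= 300:
            # Redirects, plus 304 Not Modified responses to conditional requests.
            buckets["3xx"] += 1
        elif "?" in match.group("path"):
            # Heuristic: filter/sort/session parameters often create near-duplicates.
            buckets["parameterized"] += 1
        else:
            buckets["clean"] += 1
    total = sum(buckets.values())
    return {name: count / total for name, count in buckets.items()} if total else {}
```

A large "error" or "parameterized" share suggests crawl budget that could be redirected toward pages that matter.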

Indexation Rate and Index Coverage

Another crucial metric is the indexation rate, which measures the percentage of a website's indexable pages (for example, the URLs listed in its XML sitemap) that search engines have actually indexed. A high indexation rate suggests that most of the website's content can be found through search queries.

Complementing the indexation rate is index coverage, which looks at which parts of a site are indexed: whether every section and level of the site is represented, not just the homepage and top categories. Comprehensive index coverage helps search engines understand a website's information architecture and the relationships between its pages.
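Indexation rate is a simple ratio: indexed pages divided by pages intended for indexing, times 100%. The sketch below also breaks that ratio down by top-level URL section as a rough proxy for index coverage. It assumes you already have both URL lists (for example, sitemap URLs and an indexed-pages export); the `index_coverage` name and the "first path segment = section" rule are illustrative choices, not a standard.

```python
from collections import defaultdict
from urllib.parse import urlparse


def index_coverage(intended_urls, indexed_urls):
    """Return (overall indexation rate, {section: rate}) as fractions between 0 and 1.

    intended_urls: URLs you want indexed, e.g. from your XML sitemap.
    indexed_urls: URLs confirmed as indexed, e.g. from a search console export.
    """
    intended = set(intended_urls)
    indexed = set(indexed_urls) & intended  # ignore indexed URLs outside the target set
    totals = defaultdict(lambda: [0, 0])  # section -> [indexed count, intended count]
    for url in intended:
        segments = [s for s in urlparse(url).path.split("/") if s]
        section = segments[0] if segments else "(root)"
        totals[section][1] += 1
        if url in indexed:
            totals[section][0] += 1
    overall = len(indexed) / len(intended) if intended else 0.0
    per_section = {sec: done / total for sec, (done, total) in totals.items()}
    return overall, per_section
```

A section whose rate lags well behind the site average is a natural place to look for crawl blocks, thin pages, or weak internal linking.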

Crawl Errors and Response Codes

Crawl errors and response codes reveal potential roadblocks that may be hindering a website's crawlability. Common issues, such as broken links, server errors, or pages returning error status codes (e.g., 404 Not Found, 500 Internal Server Error), can prevent search engine bots from navigating and indexing the website's content.

By identifying and resolving these errors, businesses can enhance the overall user experience and ensure that search engines can seamlessly crawl and index their website.
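Fixing a broken link means editing the page that contains it, so it helps to report the source page alongside the failing target. A minimal sketch, assuming the link graph and status codes come from an earlier site crawl (the `find_broken_links` name and input shapes are hypothetical):

```python
def find_broken_links(link_graph, status_codes):
    """List (source_page, target_url, status) for internal links to 4xx/5xx URLs.

    link_graph: dict mapping each crawled page to the internal URLs it links to.
    status_codes: dict mapping URLs to the HTTP status returned when fetched.
    Targets with no recorded status are skipped rather than guessed.
    """
    broken = []
    for source, targets in link_graph.items():
        for target in targets:
            status = status_codes.get(target)
            if status is not None and status >= 400:
                broken.append((source, target, status))
    return sorted(broken)
```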

Strategies for Improving Crawlability

To unlock the full potential of increased crawlability and drive sustainable organic growth, businesses can implement a comprehensive set of strategies tailored to their specific needs and challenges.

Enhance Technical On-Page Optimization

One of the foundational strategies for improving crawlability is to optimize the technical on-page elements of a website. This includes ensuring that the website's structure, navigation, and content are optimized for search engine bots.

Key on-page optimization techniques include:

- Implementing a clear, logical hierarchy with well-structured URLs

- Ensuring that all pages are accessible through intuitive internal linking

- Optimizing page load speeds, which improves user experience and lets bots fetch more pages in the same amount of time

- Addressing any technical issues, such as broken links or server errors
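The "well-structured URLs" in the first bullet can be partly checked automatically. The heuristics below (lowercase paths, hyphens rather than underscores, no query parameters, limited depth) and the `MAX_PATH_DEPTH` threshold are assumptions chosen for illustration, not hard limits published by any search engine.

```python
from urllib.parse import urlparse

MAX_PATH_DEPTH = 4  # hypothetical threshold; tune for your site


def url_issues(url):
    """Flag URL patterns that work against a clean, logical URL structure."""
    parsed = urlparse(url)
    segments = [s for s in parsed.path.split("/") if s]
    issues = []
    if parsed.path != parsed.path.lower():
        # URL paths are case-sensitive, so case variants can duplicate content.
        issues.append("mixed-case path")
    if "_" in parsed.path:
        issues.append("underscores instead of hyphens")
    if parsed.query:
        issues.append("query parameters")
    if len(segments) > MAX_PATH_DEPTH:
        issues.append(f"path deeper than {MAX_PATH_DEPTH} segments")
    return issues
```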

Optimize Site Architecture and Navigation

The website's information architecture and navigation play a crucial role in its crawlability. When the site structure is intuitive and user-friendly, search engine bots can navigate and index the content more effectively.

Strategies for optimizing site architecture and navigation include:

- Organizing content into a clear, hierarchical structure

- Implementing a responsive and mobile-friendly design

- Creating an intuitive, easy-to-use navigation menu

- Optimizing internal linking to facilitate seamless content discovery
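One common way to quantify how easily bots can navigate a site is click depth: the minimum number of links needed to reach a page from the homepage. The breadth-first search below is a sketch assuming a link graph exported from a site crawl; the `click_depths` name is illustrative. Pages missing from the result are orphans that no internal link path reaches.

```python
from collections import deque


def click_depths(link_graph, start="/"):
    """Breadth-first search from start; depth = minimum clicks needed to reach a page.

    link_graph maps each page to the internal pages it links to.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for linked in link_graph.get(page, []):
            if linked not in depths:  # first visit in BFS order is the shortest path
                depths[linked] = depths[page] + 1
                queue.append(linked)
    return depths
```

Pages that sit many clicks deep are typical candidates for links from category or hub pages; the depth worth worrying about varies by site.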

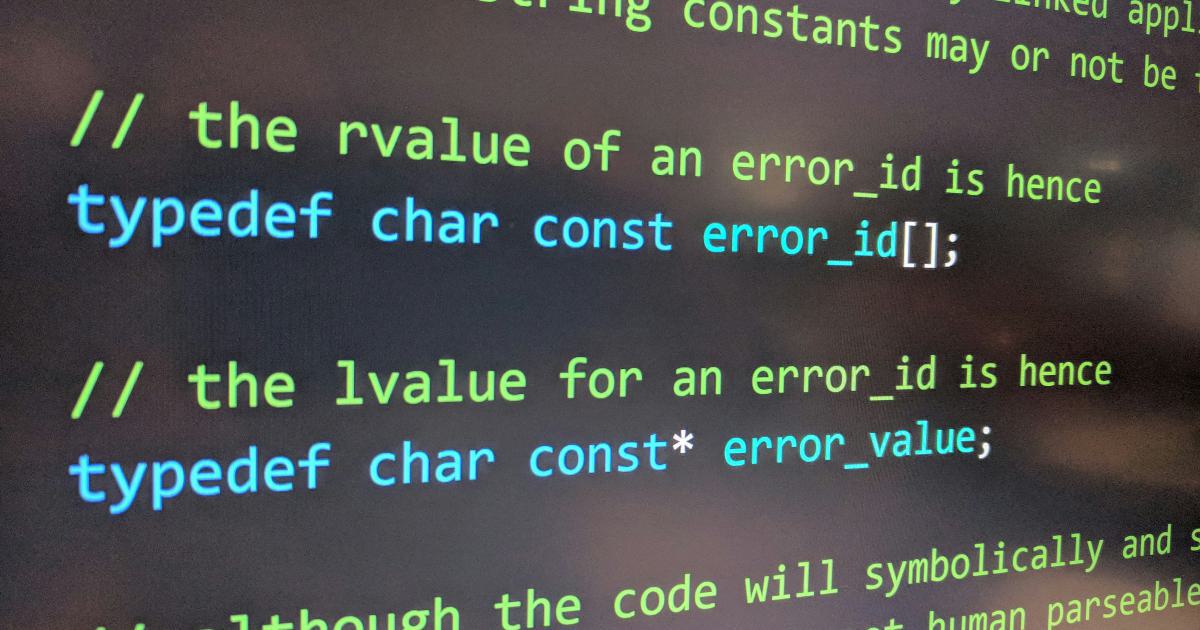

Leverage XML Sitemaps and Robots.txt Files

XML sitemaps and robots.txt files are powerful tools that help search engines discover a website's content and focus their crawling, thereby enhancing its crawlability.

XML sitemaps provide a roadmap for search engines, listing the URLs that should be crawled and indexed. By submitting a comprehensive sitemap, businesses help search engines discover all of the site's important pages, including pages that are poorly reached through internal links.
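A sitemap following the sitemaps.org protocol is a `urlset` of `url` entries, each with a required `loc` and an optional `lastmod`. The sketch below builds one with Python's standard `xml.etree.ElementTree`; the `build_sitemap` name and the (URL, date) input format are assumptions.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return a sitemaps.org XML document for (url, lastmod) pairs.

    lastmod should be a W3C Datetime string such as "2024-05-01", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in URLs, as the protocol requires.
        ET.SubElement(entry, "loc").text = loc
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

The protocol caps a single sitemap file at 50,000 URLs and 50 MB uncompressed; larger sites split their URLs across several sitemaps listed in a sitemap index file.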

Robots.txt files, on the other hand, let businesses control which parts of a website search engine bots may crawl. By disallowing low-value sections, such as internal search results or cart pages, businesses can conserve crawl budget and keep search engines focused on the most valuable content.
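As an illustration, the hypothetical robots.txt below blocks internal search results and cart pages and advertises the sitemap; the domain and paths are placeholders. Python's standard `urllib.robotparser` can confirm how the rules apply to sample URLs. This parser implements simple prefix matching and does not reproduce every extension a given search engine supports (such as `*` wildcards inside paths), so treat it as a sanity check rather than a definitive test.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/products/shoes",
            "https://example.com/search?q=shoes",
            "https://example.com/cart/checkout"):
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(url, "->", verdict)
```

Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in results if other pages link to it, so a page that must stay out of search results needs a `noindex` directive on a crawlable page instead.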

Implement Structured Data Markup

Structured data markup, typically written with the schema.org vocabulary, provides a standardized way for businesses to describe their website's content to search engines. By adding structured data to their pages, businesses give search engines more detailed, machine-readable information about the content, which helps search engines understand it and can improve how it is presented in results.

This can include adding structured data for product information, review ratings, event details, and more. By incorporating these markup elements, businesses can increase the chances of their content being displayed in rich snippets, knowledge panels, and other enhanced SERP features.
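Google recommends JSON-LD as the format for structured data. The sketch below emits a schema.org `Product` with an `Offer` and an optional `AggregateRating`; the helper name, its parameters, and any sample values are hypothetical, and real markup should be validated (for example, with Google's Rich Results Test) before deployment.

```python
import json


def product_jsonld(name, sku, price, currency, in_stock=True, rating=None, review_count=None):
    """Return a JSON-LD <script> tag describing a schema.org Product."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": "https://schema.org/" + ("InStock" if in_stock else "OutOfStock"),
        },
    }
    if rating is not None and review_count:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        }
    # Escaping "</" keeps a value like "</script>" from closing the tag early;
    # "<\/" is still valid JSON.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"
```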

Monitor and Address Crawl Errors

Regularly monitoring and addressing crawl errors is a crucial aspect of maintaining and improving a website's crawlability. By identifying and resolving issues such as broken links, server errors, or pages with problematic response codes, businesses can ensure that search engine bots can efficiently navigate and index their website's content.

Tools such as Google Search Console (notably its Page indexing and Crawl Stats reports) and third-party site crawlers can reveal a website's crawl errors, allowing businesses to prioritize and address these issues in a timely manner.
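Between tool reports, a simple way to prioritize is to compare two crawl snapshots and surface only URLs that have started failing. The sketch assumes each snapshot is a `{url: status_code}` mapping from whatever crawler you use; `new_crawl_errors` and its ordering rule (server errors before client errors) are illustrative assumptions.

```python
def new_crawl_errors(previous, current):
    """Return [(url, status)] for URLs failing now (>= 400) that were not failing before.

    URLs absent from the previous snapshot are treated as previously healthy.
    5xx errors come first, on the assumption that they more often signal
    site-wide problems; within each group, URLs are sorted alphabetically.
    """
    newly_failing = {
        url: status
        for url, status in current.items()
        if status >= 400 and previous.get(url, 200) < 400
    }
    return sorted(newly_failing.items(), key=lambda item: (item[1] < 500, item[0]))
```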

Leverage Content Refreshes and Updates

Keeping a website's content up to date and relevant is important not only for user engagement but also because it can influence how often search engines crawl the site. By regularly refreshing and updating existing content, businesses can signal to search engines that their website is actively maintained and continues to provide valuable information to users.

This can include updating product information, adding new blog posts, or revising existing content to reflect the latest industry trends and developments. By demonstrating a commitment to content freshness, businesses can encourage search engines to crawl and index their website more frequently.
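Freshness is communicated partly through the sitemap `lastmod` field, and Google has said it relies on that value only when it is consistently accurate. One way to keep it honest is to change `lastmod` only when a page's content hash changes. The record format and the `refresh_lastmod` name below are hypothetical.

```python
import hashlib
from datetime import date


def refresh_lastmod(records, pages, today=None):
    """Update each page's lastmod only when its content actually changed.

    records: dict url -> {"hash": str, "lastmod": "YYYY-MM-DD"} from the previous run.
    pages:   dict url -> current page text (ideally the main content only).
    Returns a new records dict; pages missing from `pages` are dropped.
    """
    stamp = (today or date.today()).isoformat()
    updated = {}
    for url, text in pages.items():
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        old = records.get(url)
        if old and old["hash"] == digest:
            updated[url] = old  # unchanged content keeps its previous lastmod
        else:
            updated[url] = {"hash": digest, "lastmod": stamp}
    return updated
```

Hashing only the main content, rather than full HTML that includes rotating ads or timestamps, avoids bumping `lastmod` for cosmetic changes.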

Unlocking the Full Potential of Increased Crawlability

By implementing a comprehensive strategy that addresses the key elements of crawlability, businesses can unlock significant opportunities for growth and organic traffic. The case study mentioned earlier, where a 71% increase in crawl rate led to a 27% surge in organic traffic, illustrates the transformative impact of optimizing crawlability.

However, the benefits of improved crawlability extend beyond just increased organic traffic. By ensuring that search engines can efficiently navigate and index a website's content, businesses can also:

- Enhance their online visibility and brand awareness

- Improve the relevance and quality of their search engine rankings

- Increase the chances of their content being featured in valuable SERP enhancements

- Drive more qualified leads and conversions through targeted organic traffic

To capitalize on these opportunities, businesses must remain vigilant in their efforts to monitor, optimize, and continuously improve their website's crawlability. By staying ahead of the curve and adapting to the evolving search engine landscape, they can position themselves for long-term success and sustainable organic growth.

Conclusion

In the dynamic world of digital marketing, optimizing website crawlability is a critical, yet often overlooked, aspect of driving organic growth. By understanding the impact of improved crawlability and implementing a comprehensive strategy, businesses can unlock significant opportunities for increased traffic, enhanced visibility, and more qualified leads.

The case study showcased in this article demonstrates the tangible results that can be achieved by focusing on crawlability optimization. With a 71% increase in crawl rate and a corresponding 27% surge in organic traffic, the example illustrates the transformative power of this often-undervalued SEO tactic.

As search engines continue to evolve and refine their algorithms, the importance of crawlability will only continue to grow. By staying ahead of the curve and consistently monitoring and optimizing their website's technical foundations, businesses can position themselves for long-term success and sustainable organic growth.

In the end, unlocking the full potential of increased crawlability is not just about boosting traffic numbers; it's about empowering businesses to thrive in the ever-changing digital landscape, captivating their target audience, and achieving their overarching growth objectives.